Automatic hyperparameter optimization (HPO) has proven useful across many applications, but users often prefer to retain control over the selected hyperparameters. To address this, we recently proposed Dynamic Bayesian Optimization (DynaBO), an approach that lets users steer the optimization dynamically, as visualised below.

Motivation for DynaBO

While hyperparameter optimization is great at finding well-performing hyperparameters, it treats users as spectators. They start the optimization process, go for a coffee, and hope the HPO tool finds a well-performing hyperparameter configuration. If it does not, they adapt the setup and restart the optimization. Iterating this process multiple times can lead to frustration and unhealthy caffeine intake. To reduce caffeine consumption, we propose DynaBO. Our method lets users control an ongoing optimization process online by steering it towards user-defined regions of the search space. To counter potentially misleading priors, which may result from working late to meet a deadline or from caffeine jitters, we also propose a criterion for detecting, and possibly rejecting, such priors.

Steering the Optimization Process with Priors

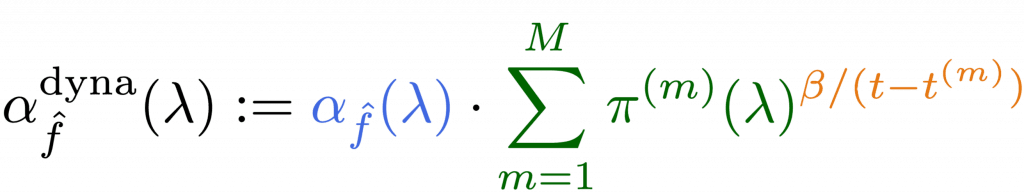

Bayesian optimization (BO) begins with an initial design that aims to cover the hyperparameter configuration space diversely. BO then alternates between two main steps: (i) fitting a probabilistic surrogate model to the observed evaluations, and (ii) selecting the next configuration to evaluate by maximizing an acquisition function.
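To make the two steps concrete, here is a toy BO loop in plain Python. It is a minimal sketch of standard BO without priors, not DynaBO's implementation, and rests on several assumptions: a 1-D search space [0, 1], a zero-mean Gaussian-process surrogate with a fixed RBF kernel, expected improvement (EI) as the acquisition function, a random initial design, and a candidate grid in place of a proper acquisition optimizer. All function names and defaults are hypothetical.

```python
import math
import random

def rbf(a, b, length_scale):
    """Squared-exponential (RBF) kernel between two scalars."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def cholesky(m):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(max(m[i][i] - s, 1e-12))
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    return L

def solve_lower(L, b):
    """Forward substitution: solve L x = b."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def solve_upper_t(L, b):
    """Back substitution: solve L^T x = b."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def fit_gp(xs, ys, length_scale=0.2, jitter=1e-6):
    """Step (i): fit a zero-mean GP surrogate; return x -> (mean, std)."""
    K = [[rbf(a, b, length_scale) + (jitter if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    L = cholesky(K)
    alpha = solve_upper_t(L, solve_lower(L, ys))  # alpha = K^-1 y

    def predict(x):
        k_star = [rbf(x, a, length_scale) for a in xs]
        mean = sum(k * a for k, a in zip(k_star, alpha))
        v = solve_lower(L, k_star)
        var = max(1.0 - sum(vi * vi for vi in v), 1e-12)
        return mean, math.sqrt(var)

    return predict

def expected_improvement(mean, std, best):
    """EI for minimization: expected amount by which a point beats `best`."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf

def bayesian_optimization(f, n_init=3, n_iter=10, seed=0):
    """Minimize f on [0, 1]; return the best (x, f(x)) found."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n_init)]  # initial design (random here)
    ys = [f(x) for x in xs]
    candidates = [i / 200 for i in range(201)]  # grid stands in for an optimizer
    for _ in range(n_iter):
        predict = fit_gp(xs, ys)  # step (i): fit the surrogate
        best = min(ys)
        # step (ii): pick the candidate that maximizes the acquisition function
        x_next = max(candidates,
                     key=lambda c: expected_improvement(*predict(c), best))
        xs.append(x_next)
        ys.append(f(x_next))
    i_best = min(range(len(ys)), key=ys.__getitem__)
    return xs[i_best], ys[i_best]
```

For example, `bayesian_optimization(lambda x: (x - 0.3) ** 2)` typically ends up near the minimiser at 0.3. The grid search over candidates keeps the sketch short; real BO tools optimize the acquisition function with dedicated optimizers.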

To steer the optimization process dynamically, we weight the acquisition function with a prior distribution. Over time, the effect of the prior decays, and the surrogate model takes over control. Concretely, we generalize πBO (Hvarfner et al., 2022): we adapt the acquisition function by incorporating distribution-shaped user priors, each of which can be provided at any iteration and decays individually from that point on. If multiple priors are provided, their effects are summed.
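The weighting can be sketched as follows. This assumes a πBO-style form in which each prior, supplied at iteration t_m, is raised to a decaying exponent beta / (t - t_m + 1), the decayed priors are summed, and the sum multiplies a non-negative acquisition value such as EI. The decay schedule, the Gaussian priors, the (t_m, mu, sigma) representation, and all names and defaults are illustrative assumptions; the exact formulation used by DynaBO may differ.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density of a 1-D Gaussian, used here as a user prior over one hyperparameter."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def prior_weight(x, priors, t, beta=10.0):
    """Sum of individually decayed prior densities at configuration x.

    priors: list of (t_m, mu, sigma) tuples, where t_m is the iteration at
    which the user supplied that prior (hypothetical representation).
    Only priors with t_m <= t contribute.
    """
    weight = 0.0
    for t_m, mu, sigma in priors:
        if t_m <= t:
            exponent = beta / (t - t_m + 1)  # assumed decay, shrinks towards 0
            weight += gaussian_pdf(x, mu, sigma) ** exponent
    return weight

def prior_weighted_acquisition(acq_value, x, priors, t, beta=10.0):
    """Multiply a non-negative acquisition value (e.g. EI) by the prior weight."""
    if not any(t_m <= t for t_m, _, _ in priors):
        return acq_value  # no prior supplied yet: plain BO
    return acq_value * prior_weight(x, priors, t, beta)
```

Because every decayed density tends to 1 as t grows, the weight approaches the number of active priors. That constant factor leaves the argmax of the acquisition function unchanged, so in this sketch the surrogate gradually takes over control, as described above.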

Rejecting Misleading Priors

To safeguard against misleading priors that may slow down the optimization process, we also propose a prior-detection mechanism that notifies the user when a prior may be misleading. To that end, DynaBO samples configurations from the prior distribution and from the region around the current incumbent, and then assesses their quality using an acquisition function. If the difference in quality in favour of the incumbent region exceeds a threshold, the prior is flagged as possibly misleading.
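A minimal sketch of such a check follows. It assumes a 1-D search space [0, 1], a Gaussian prior, Gaussian sampling around the incumbent, an acquisition-style score where higher means more promising, a comparison of mean scores, and an arbitrary threshold. The function name, its parameters, and their defaults are hypothetical, and the paper's exact criterion may differ.

```python
import random
from statistics import mean

def is_prior_misleading(acquisition, prior_mu, prior_sigma, incumbent,
                        radius=0.05, n_samples=256, threshold=0.1, seed=0):
    """Hypothetical prior check on a 1-D space [0, 1].

    acquisition: callable x -> score, higher meaning more promising (assumed).
    Returns True if configurations near the incumbent score better than
    configurations drawn from the prior by more than `threshold`.
    """
    rng = random.Random(seed)

    def clip(x):
        return min(max(x, 0.0), 1.0)  # keep samples inside the search space

    prior_samples = [clip(rng.gauss(prior_mu, prior_sigma)) for _ in range(n_samples)]
    local_samples = [clip(rng.gauss(incumbent, radius)) for _ in range(n_samples)]
    prior_score = mean(acquisition(x) for x in prior_samples)
    local_score = mean(acquisition(x) for x in local_samples)
    return local_score - prior_score > threshold
```

In a full BO loop, `acquisition` would be computed from the current surrogate; a flagged prior could then be reported to the user or rejected rather than silently steering the search.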

To dive deeper into the math, the additional proofs, and the experimental results, read the full paper on arXiv: arXiv:2511.02570.

Further References

Hvarfner, C., Stoll, D., Souza, A., Nardi, L., Lindauer, M., and Hutter, F. πBO: Augmenting Acquisition Functions with User Beliefs for Bayesian Optimization. In The Tenth International Conference on Learning Representations (ICLR’22). ICLR, 2022. arXiv:2204.11051.
