Submitted by Mehraveh Javan Roshtkhari, Matthew Toews and Marco Pedersoli as part of the AutoML’25 conference

The architecture of a neural network (number of layers, connections, operations etc.) has a great impact on its performance: a well-designed architecture can lead to significant improvements in a model’s accuracy and efficiency. Neural Architecture Search (NAS) is an automated process for designing the architecture of a neural network that explores in an efficient way combinations of basic building blocks (search space) to find the best architecture rather than relying on manual expert design. The set of possible architectures are often very vast, posing a great challenge for NAS and making the choice of search algorithm crucial on the quality and efficiency of NAS process.

Monte-Carlo Tree Search (MCTS) for NAS

MCTS is a powerful search algorithm widely used for sequential decision-making problems, such as in games like chess and Go. MCTS works by building a search tree and strategically exploring the tree. MCTS is effective for NAS as it efficiently partitions the vast NAS search space into a tree-like structure, allowing for a dynamic balance between exploring new architectural options and exploiting promising ones.

However, a critical insight into the MCTS process is that the order and structure of the tree are essential to how efficiently the search space is explored. The probability of finding the best solution is heavily affected by the tree’s layout. If the arrangement of the tree is poor, the algorithm can get stuck exploring unpromising areas, wasting significant time and resources before finding a good solution.

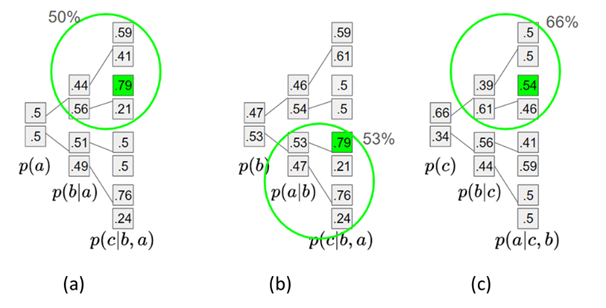

To give an example, consider the tree shown above. The goal is to find the single best architecture, represented by the highlighted leaf. In the provided image, we see three different tree structures (a, b, and c). The gray nodes represent paths and their sampling probabilities. In structure (a) the optimal solution is positioned within the tree in a way that requires a lengthy exploration of many branches before it’s discovered. While structure (c) assigns a higher probability to the branch leading to the best architecture, meaning it requires significantly less exploration to find the solution.

This insight provides the core motivation for this research: we aim to learn the search tree, but also to jointly optimize the search tree’s structure itself to accelerate the NAS process. Since the goal is to find the highest-performing architecture, the most straightforward criterion for optimization is the estimated performance of each architecture. We can then group architectures based on their performance to construct a more efficient search tree.

🔄 Iterative MCTS: A Self-Improving Approach

A major challenge is that an architecture’s performance estimate is not perfect and can change throughout the search and training process. A static, pre-defined tree can quickly become suboptimal, leading to wasted effort.

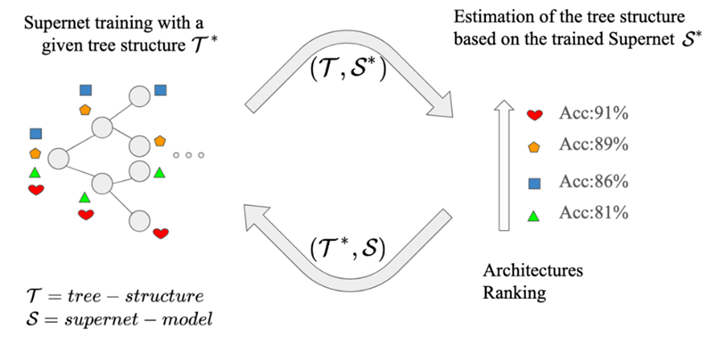

Our approach introduces an elegant solution: a continuous loop of refining the search process. Instead of using a static, pre-defined tree, the method iteratively refines the tree’s structure based on what it learns during the training process by the model (supernet). The process unfolds in a loop:

- Guided Training: The MCTS algorithm selects and trains a specific architecture from the tree using supernet S*.

- Performance Check: The chosen architecture’s performance is estimated. This provides a “reward” that helps update the search tree.

- Tree Reorganization: The search tree is reorganized to T* that prioritizes the best-performing architectures.

This approach means that good architectures are more likely to naturally emerge at the beginning of the tree, making the search more efficient. It avoids the need for a manually designed tree and learns the optimal search strategy as it goes, leading to a faster and more effective NAS process.

To validate our approach, we conducted experiments for image classification and semantic segmentation tasks. Results demonstrated that the iterative process is effective at guiding the search achieving competitive results with state-of-the-art methods. Furthermore, the experiments show that the method gradually increased the sampling rate for these top performers, verifying that it can successfully guide the search toward better solutions.

Conclusion

Iterative MCTS for NAS is a method that progressively refines the search space hierarchy based on observations from during the search. By iteratively updating the structure of the tree to favor high-accuracy architectures, the method efficiently guides the search toward promising solutions, leading to competitive performance on multiple tasks. This iterative process represents a promising direction for creating more efficient and flexible NAS methods in the future.

Comments are closed